On ChatGPT for education

The yes, the no, the maybe

I can admit when I’m wrong. In my most optimistic of moods, I think that 2023 might be the year we ditch adversarialism. On a global scale, it’s been fairly toxic and makes us all smaller, but in education, I think the “I’m right, you’re wrong” approach helps nobody, least of all students. I loved Greg Ashman’s intentionality in this post, where he argues for pragmatism and actions that make a difference, and for challenging performative dialogue that leans on vague culture-wars ideology in favour of good-faith reasoning.

In this spirit, I partially take back what I originally said about AI chatbots. Partially.

I still think that if your assessment is so limp that students can use AI to scrape a pass, then it needs to be looked at. I have revisited and refined my essay instructions several times, and I’m still not satisfied with what ChatGPT can produce. On the other hand, with a bit more free time, I have been testing the limits of this new tech, and I’m not as quick to dismiss it as I originally was. I’m sure there are people out there who have done a far better job of this than me, and of course, I would love to hear about your experiences in the comments. I’m going to start my critique with what I think ChatGPT can’t do.

Lesson plans

My main issue with this lesson plan, and with the ones I have seen posted online as great time-savers, is not that everything is terrible, but rather that a novice teacher might not be able to separate what is sound from what is not great practice.

Objectives

The first objective is OK, but I think teaching the context alone would take about a week. There’s no way analysis would come into this until far later in the unit. Novice teachers need to develop their understanding of how long things actually take to teach!

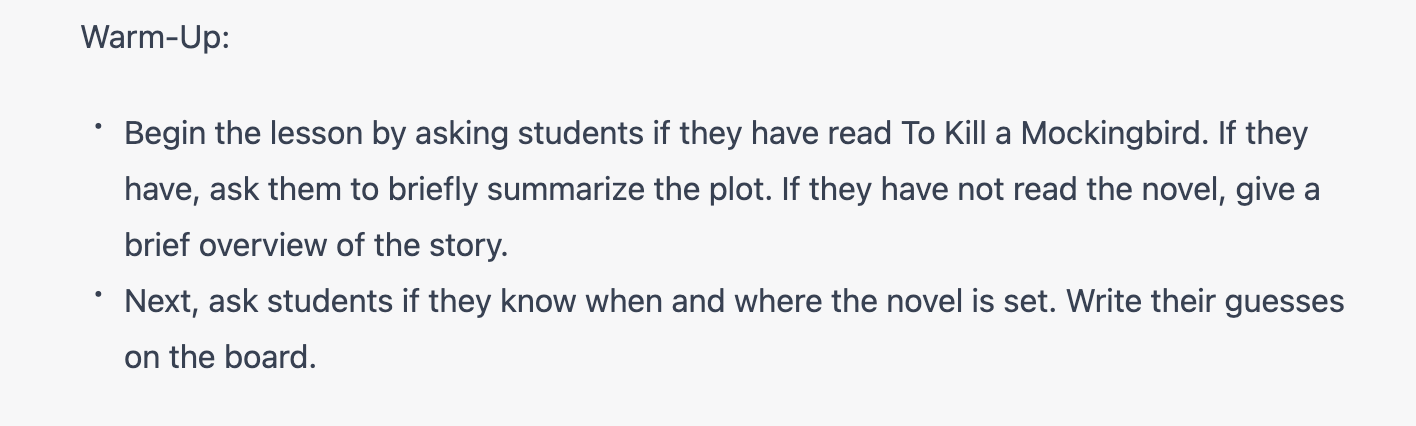

Warm-up

Aside from not being sure what the purpose of a warm-up is (push-ups?), in practice this would probably sound like a few minutes of dead air, followed by me writing nothing on the board. I’m not a fan of guessing, but I suppose I would save some whiteboard marker ink. Diagnosing prior knowledge is a good idea, especially for gifted students who might bring a lot to the classroom, but I think this kind of activity, without any prompts, cues or specific questions, would lead to five minutes of class time that students are never going to get back.

Direct instruction and guided practice

Here I can feel the chatbot really trying to frame its suggestions around best practice. The content suggestions are a good starting point for a new teacher, and the underlying assumption is that teachers will put the flesh on the bones. It could also suggest what students should be doing while receiving this instruction. The main problem I have with these lesson plans (and I have seen several like this for various subjects) is their reliance on student research, despite the label of guided practice.

What is it we are guiding when we tell students to just Google their learning? What exactly are students practising? I read Paul Kirschner’s Urban Myths the other day, and the myth of students as “digital natives” rings true here.

Vocabulary

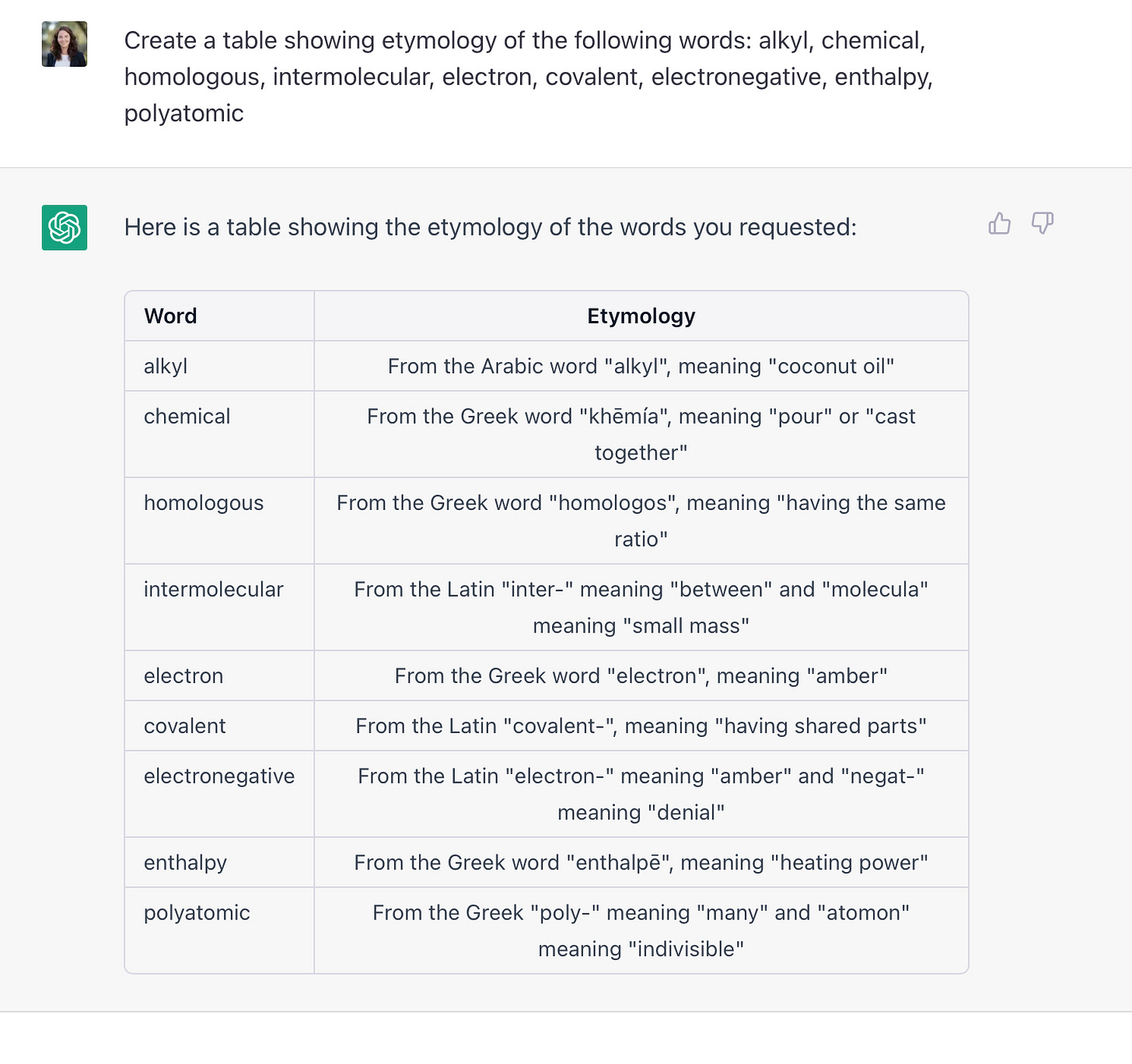

Now on to what OpenAI’s ChatGPT can do. I’ve always had pretty patchy knowledge of etymology. I tend to remember only a handful of trivial but fun prefixes and suffixes, or ones so common that a primary school student would probably know them. I’m also fairly rubbish at making vocabulary instruction exciting, so I’ve been thinking of making more of an effort this year by using Alex Quigley’s SEEC model.[1] Imagine my unbridled delight (yes, my family often have to put up with me in unbridled mode) when I discovered that ChatGPT would create an etymology table.

I’ve included one here for chemistry. In another iteration, I simply cut and pasted the Stage 5 Science glossary and asked for terms, definitions and etymology. Teachers could create a resource like this from scratch, but I estimate it would take at least 15 to 30 minutes.

Study timetables

I can’t tell you how many times students have asked me to create study timetables for them. Like an idiot, I always say yes; I mean, some of these students are old enough to drink and vote. Now ChatGPT can do it for them! I popped in some fairly convoluted requirements, even down to unit weightings, and it came up with a 7-day timetable. I’m sure it could do some kind of Pomodoro-ing as well. I’ve included just a sample below, and the subject weightings check out.

Marking

The jury is out on this one. I tried giving it a grade scale out of 20, a minimum number of quotes per paragraph, and a criterion for lexical density. I thought the app was actually a bit harsh; I would say it was about two marks too mean. The exemplar included lucid, highly abstract and elegant phrases, with deep knowledge of poetic form. I’ve used that particular student exemplar with caution over the years, worried that it might demoralise novices as they approach the complexities of W.B. Yeats.

I’ve concluded that the app (and this is in line with its essay writing) doesn’t know much about the quality of textual analysis. The comments were accurate but bland, and not very helpful as student feedback. I do think it does a pretty decent job of ranking writing, so it would be interesting to use it as a moderation tool. I’m keen to chuck in a whole class set of essays and ask it to rank them from best to weakest, providing a top and a bottom mark for it to chew on. Gosh, I’ve been anthropo/zoomorphising it non-stop!

Other possibilities

Creating flashcard content (table form)

Creating revision questions

Creating a spaced retrieval timetable using syllabus dot-points

Creating essay questions using a rubric (you would need to edit, refine or talk to the bot)

Proofreading reports

Like anything, I think this will spawn some unexpected lethal mutations on both the teacher and the student side. And like any resource, it will always depend on teacher expertise to give it true value. I would say it’s resulted in a net loss of time for me so far, but this is likely due to my tinkering and testing. I think it has huge potential for everyday time-saving, with two caveats:

Only with strong pedagogical and content knowledge will we know whether the products are worthwhile.

The old tech adage stands — garbage in, garbage out.

Let me know in the comments, or on Twitter or LinkedIn, how you’ve whiled away (or clawed back) the hours with AI.

[1] His new Substack is great, by the way.

This is a very interesting piece. We do, I think, overestimate what AI can do. You’ve done a great job testing it. I’ve been doing something similar. It does have some benefits; it does have some drawbacks. It will be fun — and educational — exploring its limits and limitations!

Been having a few robust conversations (arguments) with my mates about this recently. All the “student” essays I’ve seen it write are good but the analysis is always the weakest part and this is with decent consideration as to what to input. If I was a student I’d start all my assignments with this now and then tweak to fill gaps. Saves time and a student’s worst nightmare - difficult thinking!